AI NPCs Now Generate Voice Dialogue On The Fly




Bringing NPCs to Life: AI-Driven Voice Dialogue Models for Dynamic In‑Game Interaction

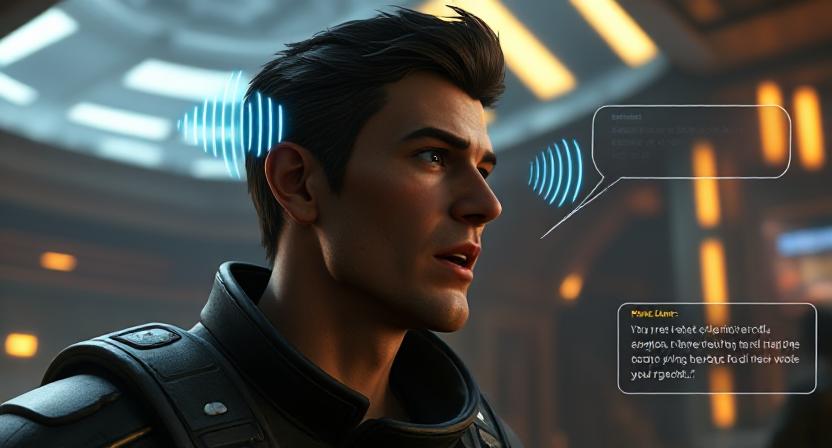

Traditionally, NPCs in games rely on scripted dialogue trees, which limit interaction and often feel repetitive. Modern AI-driven dialogue systems, by contrast, let NPCs respond dynamically and in real time to player speech or input. These systems combine natural language understanding (NLU) and text-to-speech (TTS) pipelines to generate context-aware vocal responses, making virtual characters feel alive.
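The pipeline described above can be sketched as three swappable stages. This is a minimal sketch under stated assumptions: the `VoicePipeline` class and its stage signatures are hypothetical, and the lambdas are stand-ins for real speech-to-text, language-model, and TTS backends, not any particular product's API.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stage signatures; any real STT, LLM, or TTS backend could sit behind them.
Transcriber = Callable[[bytes], str]   # player audio -> text
Responder = Callable[[str, str], str]  # (persona, player text) -> NPC reply
Synthesizer = Callable[[str], bytes]   # NPC reply -> audio


@dataclass
class VoicePipeline:
    transcribe: Transcriber
    respond: Responder
    synthesize: Synthesizer
    persona: str

    def handle_turn(self, player_audio: bytes) -> tuple[str, bytes]:
        """Run one player turn: speech-to-text, response generation, text-to-speech."""
        player_text = self.transcribe(player_audio)
        reply_text = self.respond(self.persona, player_text)
        return reply_text, self.synthesize(reply_text)


# Stub backends so the sketch runs without any model installed.
npc = VoicePipeline(
    transcribe=lambda audio: audio.decode("utf-8"),
    respond=lambda persona, text: f"[{persona}] You asked: {text}",
    synthesize=lambda text: text.encode("utf-8"),
    persona="Blacksmith",
)
reply, audio = npc.handle_turn(b"Can you fix my sword?")
print(reply)  # [Blacksmith] You asked: Can you fix my sword?
```

Keeping each stage behind a plain callable means a cloud TTS service can later be swapped for a local one without touching the turn logic.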

Core Technologies Powering AI Dialogue

Platforms such as Reelmind.ai, Inworld Voice, and ElevenLabs now use emotionally rich TTS systems that adjust tone, pacing, and pitch inflection to express joy, anger, sadness, or sarcasm.

This expressive voice generation deepens immersion considerably compared with the monotone synthetic speech of older systems.
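One common, engine-neutral way to express emotion-dependent tone, pacing, and pitch is W3C SSML, whose `<prosody>` element accepts `rate` and `pitch` attributes. The sketch below maps an emotion label to prosody settings. The emotion names and percentage values are illustrative assumptions, engines differ in how much SSML they honor, and the platforms named above may expose their own emotion controls instead.

```python
from xml.sax.saxutils import escape

# Illustrative emotion -> prosody presets (assumed values; tune per voice and engine).
PROSODY = {
    "joy":     {"rate": "110%", "pitch": "+15%"},
    "anger":   {"rate": "115%", "pitch": "+5%"},
    "sadness": {"rate": "85%",  "pitch": "-10%"},
    "sarcasm": {"rate": "95%",  "pitch": "-5%"},
    "neutral": {"rate": "100%", "pitch": "+0%"},
}


def to_ssml(text: str, emotion: str) -> str:
    """Wrap one NPC line in an SSML <prosody> element; unknown emotions fall back to neutral."""
    p = PROSODY.get(emotion, PROSODY["neutral"])
    return (
        f'<speak><prosody rate="{p["rate"]}" pitch="{p["pitch"]}">'
        f"{escape(text)}</prosody></speak>"
    )


print(to_ssml("You again? Fantastic.", "sarcasm"))
# <speak><prosody rate="95%" pitch="-5%">You again? Fantastic.</prosody></speak>
```

Escaping the text matters because model-generated dialogue can contain characters such as `&` or `<` that would otherwise break the markup.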

Natural Language Processing & Context Awareness

Generative language models (e.g., GPT-5 or custom conversational engines) interpret player input, spoken or typed, and generate NPC responses that fit the character's lore, personality, and the current narrative context. Some platforms add memory systems that track prior conversations, player choices, and emotional tone across sessions.
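A memory system of the kind described above can start as a serializable record folded into each prompt. The `NPCMemory` class, its fields, and the prompt layout below are hypothetical; a production system would more likely summarize or retrieve older turns than keep a fixed window.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class NPCMemory:
    """Hypothetical per-NPC memory that survives between play sessions."""
    npc_name: str
    lore: str
    turns: list[dict] = field(default_factory=list)   # prior exchanges
    choices: list[str] = field(default_factory=list)  # notable player choices
    mood: str = "neutral"                              # most recent emotional tone

    def remember(self, player: str, npc: str, mood: str) -> None:
        self.turns.append({"player": player, "npc": npc})
        self.mood = mood

    def build_prompt(self, player_input: str, max_turns: int = 4) -> str:
        """Fold lore, mood, choices, and recent turns into one LLM prompt."""
        history = "\n".join(
            f"Player: {t['player']}\n{self.npc_name}: {t['npc']}"
            for t in self.turns[-max_turns:]
        )
        choices = ", ".join(self.choices) or "none"
        return (
            f"You are {self.npc_name}. Lore: {self.lore}\n"
            f"Current mood: {self.mood}. Player choices so far: {choices}\n"
            f"{history}\nPlayer: {player_input}\n{self.npc_name}:"
        )

    def save(self) -> str:
        return json.dumps(asdict(self))

    @classmethod
    def load(cls, data: str) -> "NPCMemory":
        return cls(**json.loads(data))


mem = NPCMemory("Mira", "Ferry keeper who distrusts outsiders.")
mem.choices.append("paid the toll without haggling")
mem.remember("Can you take me across?", "For the usual fee, yes.", "wary")
restored = NPCMemory.load(mem.save())  # e.g. after the game restarts
print(restored.build_prompt("Have we met before?"))
```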

Speech-to-Speech & Role Consistency Tools

Beyond TTS, speech-to-speech models and persona-aware frameworks such as OmniCharacter keep personality traits and vocal styles consistent, even across branching dialogue paths. Latencies can be as low as 289 ms, making voice exchanges feel nearly instantaneous.

Behavioral & Emotional Adaptation

NPCs now adapt their responses to player behavior. Reinforcement learning can refine NPC dialogue patterns over time, so that NPCs build trust, grow hostile, or form alliances based on player actions. Players report higher replayability and richer narratives from these emergent interactions.
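The article does not say which reinforcement-learning method such systems use. As a deliberately simple stand-in, an epsilon-greedy multi-armed bandit can learn which dialogue style earns the most reward from a given player. The styles, the reward values, and the `DialogueStyleBandit` class are all assumptions for illustration.

```python
import random


class DialogueStyleBandit:
    """Epsilon-greedy bandit: a simple stand-in for RL-based dialogue tuning."""

    def __init__(self, styles: list[str], epsilon: float = 0.1, seed: int = 0) -> None:
        self.styles = styles
        self.epsilon = epsilon
        self.counts = {s: 0 for s in styles}
        self.values = {s: 0.0 for s in styles}  # running mean reward per style
        self.rng = random.Random(seed)

    def choose(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.styles)       # explore
        return max(self.styles, key=self.values.get)  # exploit best so far

    def update(self, style: str, reward: float) -> None:
        """Incrementally update the mean reward observed for a style."""
        self.counts[style] += 1
        self.values[style] += (reward - self.values[style]) / self.counts[style]


# Simulated player who responds best to warmth (hypothetical reward values).
player_reward = {"friendly": 1.0, "guarded": 0.3, "hostile": 0.0}
bandit = DialogueStyleBandit(["friendly", "guarded", "hostile"])
for _ in range(200):
    style = bandit.choose()
    bandit.update(style, player_reward[style])
print(max(bandit.values, key=bandit.values.get))  # friendly
```

In a game, the reward might come from signals such as whether the player keeps talking or completes a quest; which signals to use is a design decision the sketch leaves open.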

Real-World Deployments and Indie Innovation

Projects like Mantella, a Skyrim mod that combines Whisper speech-to-text, ChatGPT-style LLMs, and xVASynth speech synthesis, let players speak naturally with NPCs. These NPCs detect game state, maintain conversation memory, and evolve their personality traits (reelmind.ai).

AAA Studios & Emerging Titles

Major studios and technology companies such as Ubisoft (with its internal Neo NPC project), Nvidia (with its AI NPC toolsets), and Jam & Tea Studios (with Retail Mage) are integrating NPC systems that generate dynamic responses tied to player input or environmental context, making gameplay more fluid and less mechanical.

Advantages for Developers and Players

Dynamic voice dialogue makes each playthrough unique: NPCs remember prior choices, adapt their tone, and branch the narrative, offering deeper interactivity without elaborate scripting.

Personalized Experiences

AI-driven NPC personalities, rather than merely scripted dialogue, enable truly adaptive in-game behavior.

For instance, merchants remember past negotiation styles and adjust prices or tone accordingly; companions shift their emotional voice and demeanor after conflicts; and quest-givers tweak rewards and narrative arcs as rapport with the player develops.

Ultimately, these emergent AI systems create gameplay that feels both personalized and responsive, freeing designers from rigid scripting while significantly enhancing player immersion.
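The merchant example can be made concrete with a small pricing rule driven by remembered behavior. `MerchantMemory`, `quote_price`, and every weight in them are hypothetical tuning choices, not taken from any shipped game.

```python
from dataclasses import dataclass


@dataclass
class MerchantMemory:
    """Hypothetical record of how one player has dealt with one merchant."""
    haggles: int = 0      # times the player pushed for a lower price
    purchases: int = 0    # completed purchases
    rapport: float = 0.0  # -1.0 (hostile) to 1.0 (friendly)


def quote_price(base: float, mem: MerchantMemory) -> float:
    """Adjust a base price using remembered behavior (illustrative weights)."""
    multiplier = 1.0
    multiplier += 0.05 * min(mem.haggles, 4)     # expect haggling, open higher
    multiplier -= 0.02 * min(mem.purchases, 10)  # reward repeat customers
    multiplier -= 0.10 * mem.rapport             # warmth lowers, hostility raises
    return round(base * max(multiplier, 0.5), 2)


print(quote_price(100, MerchantMemory()))              # 100.0
print(quote_price(100, MerchantMemory(rapport=-1.0)))  # 110.0
print(quote_price(100, MerchantMemory(2, 5, 0.5)))     # 95.0
```

The caps on each term and the 0.5 floor keep a long play history from pushing prices to absurd values. The tone of the merchant's reply could be driven by the same record.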

Challenges & Ethical Considerations

AI could replicate celebrity or actor voices without authorization, so ethical licensing and strict guardrails are essential to prevent misuse. Reelmind and other platforms require explicit consent before a voice can be cloned.
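On the engineering side, a consent requirement can be enforced as a hard check before any cloning request reaches a voice model. The `VoiceConsent` record, the `authorize_clone` function, and the use labels below are hypothetical. They do not reflect Reelmind's or any other platform's actual API.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class VoiceConsent:
    """Hypothetical signed consent record for one voice actor."""
    actor_id: str
    allowed_uses: frozenset[str]  # e.g. frozenset({"npc_dialogue"})
    expires: date


class ConsentError(PermissionError):
    """Raised when a cloning request lacks valid consent."""


def authorize_clone(actor_id: str, use: str,
                    registry: dict[str, VoiceConsent], today: date) -> VoiceConsent:
    """Allow cloning only with explicit, unexpired consent covering this use."""
    consent = registry.get(actor_id)
    if consent is None:
        raise ConsentError(f"no consent on file for {actor_id}")
    if today > consent.expires:
        raise ConsentError(f"consent for {actor_id} expired on {consent.expires}")
    if use not in consent.allowed_uses:
        raise ConsentError(f"{actor_id} did not consent to {use!r}")
    return consent


registry = {
    "actor-042": VoiceConsent("actor-042", frozenset({"npc_dialogue"}), date(2026, 12, 31)),
}
authorize_clone("actor-042", "npc_dialogue", registry, date(2025, 11, 1))  # allowed
```

Failing closed, by raising instead of returning a flag, makes it harder for a caller to skip the check accidentally.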

Representation Bias

TTS and dialogue models trained on narrow datasets can perpetuate stereotypes, introducing unintentional bias into voice and conversational behavior.

Because of limited demographic or linguistic coverage, this can cause representational harm that disproportionately affects marginalized or underrepresented groups.

It is therefore crucial to use inclusive training data and diversity-aware conditioning to mitigate bias and keep model behavior equitable.

Techniques such as bias auditing, structured debiasing, and representational parity checks are also essential for building robust, fair dialogue models.
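A representational parity check can start with something as simple as comparing outcome rates across groups. The sketch computes a demographic-parity-style gap over audit records. The record format, the idea of flagging "hostile" replies, and the 0.1 tolerance are assumptions, and the toy records are made up for illustration.

```python
from collections import defaultdict


def parity_gap(records: list[tuple[str, bool]]) -> tuple[dict[str, float], float]:
    """Return each group's outcome rate and the largest gap between any two groups.

    records: (group, outcome) pairs, e.g. whether an audit flagged an NPC reply
    to a test persona from that group as hostile.
    """
    if not records:
        raise ValueError("need at least one record")
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        hits[group] += int(outcome)
    rates = {g: hits[g] / totals[g] for g in totals}
    return rates, max(rates.values()) - min(rates.values())


# Toy audit records (made up for illustration).
audit = [("A", True), ("A", False), ("A", False), ("A", False),
         ("B", True), ("B", True), ("B", False), ("B", False)]
rates, gap = parity_gap(audit)
print(rates, gap)  # {'A': 0.25, 'B': 0.5} 0.25
if gap > 0.1:  # assumed tolerance
    print("parity check failed: review training data or conditioning")
```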

Latency & Processing Constraints

Real-time voice generation requires substantial computational power.

Most production systems aim to keep end-to-end voice latency at or below 500 ms, which sits at the threshold of what players tolerate in fast-paced games.

When the voice pipeline is not carefully optimized, even minor delays or audio stutters can undermine gameplay fluidity and break immersion.
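A 500 ms target is easier to reason about as a per-stage budget. The rough model below estimates time to first audio: a stage that streams lets the next one start on its first chunk, while a non-streaming stage makes everything downstream wait for it to finish. The `Stage` record and all timings are hypothetical.

```python
from dataclasses import dataclass, replace

BUDGET_MS = 500  # end-to-end target discussed above


@dataclass(frozen=True)
class Stage:
    name: str
    first_chunk_ms: float  # delay until the stage emits its first usable chunk
    total_ms: float        # delay until the stage finishes
    can_stream: bool       # may the next stage start on the first chunk?


def time_to_first_audio(stages: list[Stage]) -> float:
    """Rough estimate of how long until the player hears the NPC start speaking."""
    return sum(s.first_chunk_ms if s.can_stream else s.total_ms for s in stages)


# Made-up timings for illustration only.
stages = [
    Stage("stt", 150, 150, can_stream=False),  # waits for end of utterance
    Stage("llm", 120, 900, can_stream=True),
    Stage("tts", 90, 600, can_stream=True),
]
streamed = time_to_first_audio(stages)
blocking = time_to_first_audio([stages[0], replace(stages[1], can_stream=False), stages[2]])
print(streamed, streamed <= BUDGET_MS)  # 360 True
print(blocking, blocking <= BUDGET_MS)  # 1140 False
```

Under these assumed numbers, streaming the language model's output into TTS is what keeps the turn inside budget. Real pipelines also need TTS to buffer enough text for natural phrasing, which this model ignores.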

Looking Forward: Emerging Directions

Newer systems such as OmniCharacter unify speech and personality behavior, so NPCs keep their vocal traits and character alignment consistent throughout multi-turn interactions.

Latency also remains low, around 289 ms, enabling real-time responsiveness even in fast-paced dialogue.

Procedural Narrative Systems (PANGeA)

By integrating with generative narrative frameworks such as PANGeA, developers can procedurally align NPC dialogue with ongoing story beats and personality traits.

As a result, even unpredictable player input is handled coherently, preserving narrative consistency and character identity.
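PANGeA's own mechanism is not described here, so the following is only a separate, simple illustration of the same goal: a guard that blocks NPC replies revealing facts the story has not yet unlocked. The beat names, the fact table, and the keyword matching are hypothetical. A real system would more likely use a classifier or the narrative engine's own state.

```python
# Hypothetical beat table: facts an NPC may reveal once each beat is reached.
BEAT_FACTS = {
    "act1_arrival": {"mine_closed"},
    "act2_betrayal": {"mine_closed", "mayor_hired_bandits"},
}
# Crude keyword cues for detecting each fact in a generated reply.
FACT_CUES = {
    "mine_closed": ("mine", "closed"),
    "mayor_hired_bandits": ("mayor", "hired"),
}


def guard_reply(reply: str, beat: str, fallback: str) -> str:
    """Return the reply unless it reveals a fact not yet unlocked at this beat."""
    unlocked = BEAT_FACTS.get(beat, set())
    text = reply.lower()
    for fact, cues in FACT_CUES.items():
        if fact not in unlocked and all(cue in text for cue in cues):
            return fallback  # spoiler: regenerate or use an in-character deflection
    return reply


line = "Between us, the mayor hired those bandits."
print(guard_reply(line, "act1_arrival", "Some things are better left unsaid."))
print(guard_reply(line, "act2_betrayal", "Some things are better left unsaid."))
```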

Local LLM and Voice Models in Game Engines

Open-weight models like Microsoft's Phi-3 can now be deployed inside engines such as Unity.

Indie developers and modders can therefore embed local LLMs and TTS systems, for instance standalone ONNX-quantized Phi-3 binaries, to enable offline dialogue across multiple NPCs.

For example, Unity packages such as LLM for Unity (by UndreamAI) and CUIfy the XR already support on-device inference for multiple NPC agents powered by embedded LLMs, STT, and TTS, all without internet access.

As a result, virtual characters can hold immersive, dynamic conversations even in fully offline builds.
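Several NPCs can share one locally loaded model as long as each keeps its own persona and history. In the sketch below, `generate` is a placeholder for whatever local inference call a project uses, for example a quantized Phi-3 build. The `OfflineDialogueManager` class and its history window are assumptions, not the API of LLM for Unity or CUIfy the XR.

```python
from typing import Callable

# Placeholder for any local inference call, e.g. a quantized on-device model.
LocalGenerate = Callable[[str], str]


class OfflineDialogueManager:
    """Route several NPCs through one shared local model with separate contexts."""

    def __init__(self, generate: LocalGenerate, history_limit: int = 6) -> None:
        self.generate = generate
        self.history_limit = history_limit
        self.personas: dict[str, str] = {}
        self.histories: dict[str, list[str]] = {}

    def add_npc(self, name: str, persona: str) -> None:
        self.personas[name] = persona
        self.histories[name] = []

    def talk(self, name: str, player_line: str) -> str:
        history = self.histories[name]
        history.append(f"Player: {player_line}")
        recent = history[-self.history_limit:]
        prompt = "\n".join([self.personas[name], *recent, f"{name}:"])
        reply = self.generate(prompt).strip()
        history.append(f"{name}: {reply}")
        return reply


# Stub model: echoes the persona line so the sketch runs without any weights.
manager = OfflineDialogueManager(generate=lambda prompt: prompt.splitlines()[0])
manager.add_npc("Guard", "Halt! State your business.")
manager.add_npc("Bard", "A song for a coin?")
print(manager.talk("Guard", "Just passing through."))  # Halt! State your business.
```

Loading the model once and swapping only the prompt keeps memory use flat as more NPCs are added, which matters on consumer hardware.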

Final Thoughts

AI-powered dynamic voice NPCs represent a transformative leap for narrative-driven gaming. From independent projects to AAA studios, developers are discovering fresh ways to craft immersive worlds where characters remember, react, and feel human. Dialogue becomes less mechanical and more meaningful.

Yet as this technology evolves, design responsibility becomes paramount: guarding against misuse, bias, and loss of narrative control. With proper ethical frameworks, platforms like Reelmind, Dasha Voice AI, Inworld, and OmniCharacter are paving the way toward more emotionally engaging interactive game worlds.

The next generation of NPCs may not just talk; they will converse with personality, memory, and emotional intelligence. And that is where storytelling truly comes alive.
